Yves here. Rajiv Sethi describes a fascinating, large-scale study of social media behavior. It looked at “toxic content,” which is presumably the same as, or awfully close to, what is usually called hate speech. It found that when the platform succeeded in reducing the amount of that content, the users who had amplified it the most both reduced their overall participation and increased the rate at which they boosted the hateful content.

Now I still have doubts about the study’s methodology. It used a Google algo to determine what was abusive content, here hostile speech directed at India’s Muslim population. Google’s algos made a complete botch of identifying offensive text at Naked Capitalism (including trying to censor a post by Sethi himself, cross posted at Naked Capitalism), to the degree that when challenged, they dropped all their complaints. Perhaps this algo is better, but there is cause to wonder absent some evidence.

What I find a bit more distressing is Sethi touting BlueSky as a less noxious social media platform for having rules for limiting content viewing and sharing that align to a fair degree with the findings of the study. Sethi contends that BlueSky represents a better compromise between notions of free speech and curbs on hate speech than is found on current big platforms.

I have trouble with the idea that BlueSky is less hateful, based on the appalling treatment of Jesse Singal. Singal has attracted the ire of trans activists on BlueSky for merely being even-handed. That included falsely accusing him of publishing private medical records of transgender children. Quillette rose to his defense in The Campaign of Lies Against Journalist Jesse Singal - And Why It Matters. This is what happened to Singal on BlueSky:

This second round was prompted by the fact that I joined Bluesky, a Twitter alternative that has a base of hardened far-left power users who get really mad when folks they dislike show up. I quickly became the single most blocked account on the site and, fearful of second-order contamination, these users also developed tools to allow for the mass-blocking of anyone who follows me. That way they won’t have to face the specter of seeing any content from me or from anyone who follows me. A truly safe space, at last.

But that hasn’t been enough: They’ve also been aggressively lobbying the site’s head of trust and safety, Aaron Rodericks, to boot me off (here’s one example: “you asshole. you asshole. you asshole. you asshole. you want me dead. you want me fucking dead. i bet you’ll block me and I’ll pass right out of existence for you as fast as i entered it with this post. I’ll be buried and you won’t care. you love your buddy singal so much it’s sick.”). Many of these complaints come from people who seem so highly dysregulated they would have trouble successfully patronizing a Waffle House, but because they’re so active online, they can have a real-world impact.

So, not content with merely blocking me and blocking anyone who follows me, and screaming at people who refuse to block me, they’ve also begun recirculating every negative rumor about me that’s been posted online since 2017 or so, and there’s a rich back catalogue, to be sure. They’ve even launched a new one: I’m a pedophile. (Yes, they’re really saying that!)

Mind you, this is only the first section of a long catalogue of vitriolic abuse on BlueSky.

IM Doc didn’t give much detail, but a group of doctors who were what one might call heterodox on matters Covid went to BlueSky and quickly returned to Twitter. They were apparently met with great hostility. I hope he will elaborate further in comments.

Another reason I am leery of restrictions on opinion, even those that claim to be designed mainly to curb hate speech, is the way that Zionists have succeeded in getting many governments to treat any criticism of Israel’s genocide, or advocacy of BDS as a means to check it, as anti-Semitism. Strained notions of hate are being weaponized to censor criticism of US policies.

So perhaps Sethi will consider that the reason BlueSky appears more congenial is that some users engage in extremely aggressive, as in often hateful, norms enforcement to crush the expression of views and information in conflict with their ideology. I don’t consider that to be an improvement over the standards elsewhere.

By Rajiv Sethi, Professor of Economics, Barnard College, Columbia University & External Professor, Santa Fe Institute. Originally published at his site

The steady drumbeat of social media posts on new research in economics picks up pace towards the end of the year, as interviews for faculty positions are scheduled and candidates try to draw attention to their work. A few years ago I came across a fascinating paper that immediately struck me as having major implications for the way we think about meritocracy. That paper is now under revision at a flagship journal and the lead author is on the faculty at Tufts.

This year I’ve been on the alert for work on polarization, which is the topic of a seminar I’ll be teaching next semester. One especially interesting new paper comes from Aarushi Kalra, a doctoral candidate at Brown who has conducted a large-scale online experiment in collaboration with a social media platform in India. The (unnamed) platform resembles TikTok, which the country banned in 2020. There are now several apps competing in this space, from multinational offshoots like Instagram Reels to homegrown alternatives such as Moj.

The platform in this experiment has about 200 million monthly users and Kalra managed to treat about one million of these and track another four million as a control group.1 The treatment involved replacing algorithmic curation with a randomized feed, with the goal of identifying effects on exposure and engagement involving toxic content. In particular, the author was interested in the viewing and sharing of material that was classified as abusive based on Google’s Perspective API, and was specifically targeted at India’s Muslim minority.

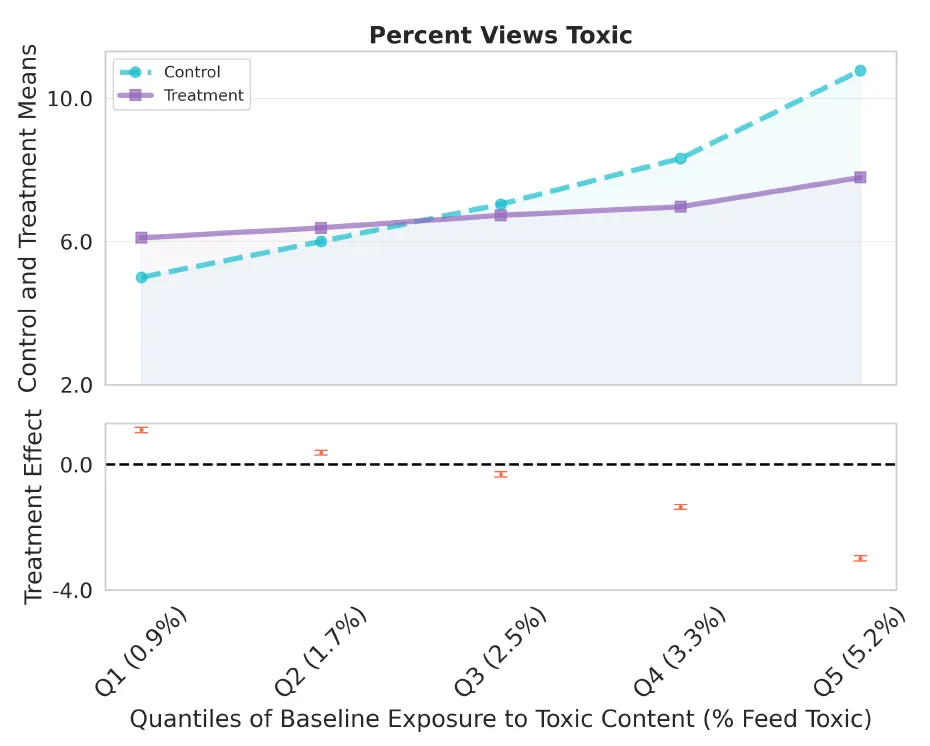

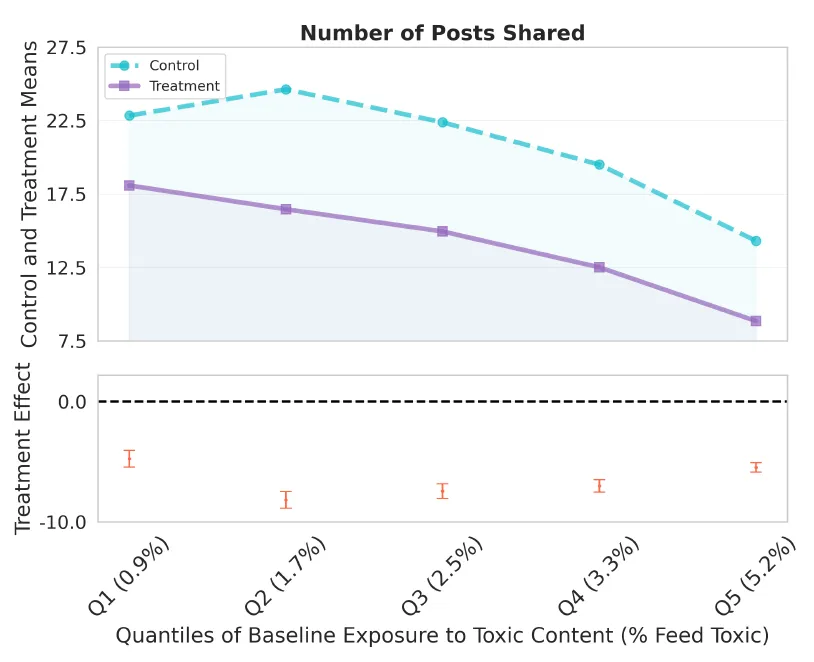

The results are sobering. Those in the treated group who had previously been most exposed to toxic content (based on algorithmic responses to their prior engagement) responded to the reduction in exposure as follows. They lowered overall engagement, spending less time on the platform (and more on competing sites, based on a follow-up survey). But they also increased the rate at which they shared toxic content conditional on encountering it. That is, their sharing of toxic content declined less than their exposure to it. They also increased their active search for such material on the platform, thus ending up somewhat more exposed than treated users who were least exposed at baseline.
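To make the conditional sharing rate concrete, here is a small arithmetic sketch in Python. The counts are invented for illustration only and are not taken from Kalra’s paper; the helper name `conditional_share_rate` is likewise hypothetical. It shows how total toxic shares can fall while the share rate per toxic post encountered rises.

```python
def conditional_share_rate(shares: int, exposures: int) -> float:
    """Toxic posts shared per toxic post encountered."""
    if exposures <= 0:
        raise ValueError("exposures must be positive")
    return shares / exposures


# Hypothetical counts for one heavily exposed user (NOT from the study).
baseline = {"exposures": 100, "shares": 10}  # algorithmic feed
treated = {"exposures": 60, "shares": 9}     # randomized feed

baseline_rate = conditional_share_rate(baseline["shares"], baseline["exposures"])
treated_rate = conditional_share_rate(treated["shares"], treated["exposures"])

# Exposure falls by 40 percent but shares fall by only 10 percent,
# so the rate of sharing conditional on exposure goes up.
exposure_change = treated["exposures"] / baseline["exposures"] - 1
shares_change = treated["shares"] / baseline["shares"] - 1

print(f"exposure change: {exposure_change:+.0%}")
print(f"shares change:   {shares_change:+.0%}")
print(f"share rate:      {baseline_rate:.2f} -> {treated_rate:.2f}")
```

With these made-up numbers the user shares fewer toxic posts in total, yet shares a larger fraction of the toxic posts that still reach them, which is the pattern described above.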

Now one might argue that switching to a randomized feed is a very blunt instrument, and not one that platforms would ever implement or regulators favor. Even those who were most exposed to toxic content under algorithmic curation had feeds that were predominantly non-toxic. For instance, the proportion of content classified as toxic was about five percent in the feeds of the quintile most exposed at baseline; the remainder of posts catered to other kinds of interests. It is not surprising, therefore, that the intervention led to sharp declines in engagement.

You can see this very clearly by looking at the quintile of treated users who were least exposed to toxic content at baseline. For this set of users, the switch to the randomized feed led to a statistically significant increase in exposure to toxic posts:

Source: Figure 1 in Kalra (2024)

These users were refusing to engage with toxic content at baseline, and the algorithm accordingly avoided serving them such material. But the randomized feed did not do this. As a result, even these users ended up with significantly lower engagement:

In principle, one could imagine interventions that degrade the user experience to a lesser degree. The author uses model-based counterfactual simulations to explore the effects of randomizing only a proportion of the feed for selected users (those most exposed to toxic content at baseline). This is interesting, but existing moderation policies usually target content rather than users, and it might be worth exploring the effects of suppressed or reduced exposure only to content classified as toxic, while maintaining algorithmic curation more generally. I think the model and data would allow for this.

There is, however, an elephant in the room: the specter of censorship. From a legal, political, and ethical standpoint, this is more relevant for policy decisions than platform profitability. The idea that people have a right to access material that others may find anti-social or abusive is deeply embedded in many cultures, even if it is not always codified in law. In such environments the suppression of political speech by platforms is understandably viewed with suspicion.

At the same time, there is no doubt that conspiracy theories spread online can have devastating real effects. One way to escape the horns of this dilemma may be through composable content moderation, which allows users a lot of flexibility in labeling content and deciding which labels they want to activate.

This seems to be the approach being taken at Bluesky, as discussed in an earlier post. The platform gives people the ability to hide an abusive reply from all users, which blunts the strategy of disseminating abusive content by replying to a highly visible post. The platform also allows users to detach their posts when quoted, thus compelling those who want to mock or ridicule to use (less effective) screenshots instead.
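As a rough illustration of what label-based, user-controlled moderation means, here is a toy sketch in Python. It does not use or describe Bluesky’s actual API: the `Post` class, the label names, and the `visible_feed` helper are all hypothetical. The point is only that the same labeled posts produce different feeds depending on which labels each user activates, with nothing deleted centrally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    text: str
    labels: frozenset = frozenset()  # labels attached by labelers (hypothetical)


def visible_feed(posts, hidden_labels):
    """Return the posts that carry none of the labels this user chose to hide."""
    hidden = frozenset(hidden_labels)
    return [p for p in posts if not (p.labels & hidden)]


# Invented posts and labels, for illustration only.
posts = [
    Post("alice", "New working paper on polarization"),
    Post("bob", "Heated reply", frozenset({"rude"})),
    Post("carol", "Unverified rumor", frozenset({"misleading"})),
]

# Each user activates their own set of labels; no post is removed for everyone.
cautious_user = visible_feed(posts, {"rude", "misleading"})
permissive_user = visible_feed(posts, set())

print([p.author for p in cautious_user])
print([p.author for p in permissive_user])
```

In this toy version the filtering decision sits with the reader, not the platform, which is the trade-off the passage above points to.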

Bluesky is currently experiencing some serious growing pains.2 But I am optimistic about the platform in the long run, because the ability of users to fine-tune content moderation should allow for a variety of experience and insulation from attack without much need for centralized censorship or expulsion.

It has been interesting to watch entire communities (such as academic economists) migrate to a different platform with content and connections kept largely intact. Such mass transitions are relatively rare because network effects entrench platform use. But once they occur, they are hard to reverse. This gives Bluesky a bit of breathing room as the company tries to figure out how to handle complaints in a consistent manner. I think that the platform will thrive if it avoids banning and blocking in favor of labeling and decentralized moderation. This should allow those who prioritize safety to insulate themselves from harm, without silencing the most controversial voices among us. Such voices often turn out, in retrospect, to have been the most prophetic.